VisualAcoustic.ai pioneers physics-anchored intelligence across critical domains:

Quality Assurance: Physics-Informed Anomaly Detection

Industrial and manufacturing process control,

Biomedical and scientific instrumentation,

Human-assistive and environmental sensing,

and Governance-grade data verification and compliance.

Through its newly filed International Patent Application, the platform unites light and sound into a single, persistence-anchored framework, delivering measurable, auditable intelligence where precision, safety, and accountability matter most.

How Phocoustic's features map to human brain functions

Why Phocoustic Cannot Be Designed Around: A Patent-Defensive Technical Brief

VisualAcoustic.ai — Revolutionizing sensory technology through proprietary QR-like encoding (XVAM) and advanced physics-informed drift analysis. Combining visible, infrared, and ultraviolet light processing with transformer models, VisualAcoustic creates actionable acoustic insights for industrial, medical, and environmental applications.

December 5, 2025 — Champaign, Illinois — Phocoustic, Inc., the company pioneering physics-anchored perception and cognition, is pleased to announce the filing of CIP-13, the newest continuation in its rapidly expanding global patent family. CIP-13 advances the core VisualAcoustic™ framework by introducing three foundational capabilities: Object Context Identification (OCID), Object-Resolved Drift Lineage (ORDL), and a new class of environmentally conditioned reasoning mechanisms under Semantic Epigenetics (SEGEN).

CIP-13 extends and strengthens the inventions disclosed in CIP-10, CIP-10 ACI, CIP-11, CIP-12, and the recently filed PCT applications, and represents a major step toward fully physics-qualified artificial cognition.

Modern production lines, PCB inspection systems, and sequential conveyor environments share a common requirement: each object must be evaluated independently, with its own drift lineage, history, and admissibility window.

CIP-13 introduces:

Object Context Identification (OCID): a physics-anchored mechanism enabling the system to distinguish which object generated which drift signature in sequential imaging environments. OCID prevents cross-object contamination, ensures deterministic lineage assignment, and enables per-object semantic tracking across time and motion.

Object-Resolved Drift Lineage (ORDL): a new lineage discipline binding drift signatures to the correct object, enabling:

per-object anomaly evolution,

stable production-line audit trails,

traceability for drift-based material behaviors, and

deterministic semantic inheritance and pruning.

ORDL directly supports both static inspection (PCB, wafer) and dynamic systems (conveyors, robotics), providing the mathematical backbone for physics-anchored auditability.
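The per-object binding described above can be pictured as a small lineage store. The sketch below is illustrative only: `DriftSignature`, `LineageStore`, the string object IDs, and the two signature fields are assumptions made for the example and are not taken from the filing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DriftSignature:
    """Hypothetical drift record; the field names are assumptions."""
    frame: int        # index of the frame in which the drift was observed
    magnitude: float  # drift magnitude in arbitrary units


class LineageStore:
    """Keeps one ordered drift lineage per object so histories never mix."""

    def __init__(self):
        self._lineages = defaultdict(list)

    def record(self, object_id: str, signature: DriftSignature) -> None:
        # Append only to the lineage of the object that produced the drift.
        self._lineages[object_id].append(signature)

    def lineage(self, object_id: str) -> list:
        # Return a copy so callers cannot alter the stored audit trail.
        return list(self._lineages.get(object_id, []))

    def prune(self, object_id: str) -> None:
        # Drop the lineage once an object leaves the line.
        self._lineages.pop(object_id, None)


store = LineageStore()
store.record("board-001", DriftSignature(frame=1, magnitude=0.2))
store.record("board-002", DriftSignature(frame=1, magnitude=0.9))
store.record("board-001", DriftSignature(frame=2, magnitude=0.3))

print([s.magnitude for s in store.lineage("board-001")])
```

Keying every record by object ID is what keeps a strong drift on one board from leaking into the history of its neighbour on the conveyor.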

CIP-13 also introduces SEGEN, the Semantic Epigenetics Generator, which governs how environmental conditions shape semantic development within physics-anchored cognition. SEGEN converts VISURA-captured environmental signals—illumination stability, geometry, drift recurrence, operator cues, multi-camera coherence—into structured epigenetic influence vectors that modulate:

semantic activation thresholds,

reinforcement and suppression rules,

lineage persistence, and

domain-specific specialization without training.

This capability strengthens the PHOENIX developmental engine, the Semantic Genome (SGN), and the broader PACI (Physics-Anchored Cognitive Intelligence) architecture described in earlier filings.
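One way to picture an influence vector modulating an activation threshold is sketched below. This is a toy model under stated assumptions: the signal names, the linear mapping from stability to influence, and the `gain` parameter are all hypothetical and are not drawn from the CIP-13 disclosure.

```python
def influence_vector(signals: dict) -> dict:
    """Map each environmental signal in [0, 1] to an influence in [-1, 1].

    A fully stable signal (1.0) gives +1 and a fully unstable one (0.0) gives -1.
    The linear mapping is an assumption chosen for illustration.
    """
    return {
        name: 2.0 * min(max(value, 0.0), 1.0) - 1.0
        for name, value in signals.items()
    }


def modulated_threshold(base: float, influence: dict, gain: float = 0.5) -> float:
    """Lower the activation threshold in stable conditions, raise it otherwise."""
    if not influence:
        return base  # no environmental evidence, so leave the threshold alone
    mean_influence = sum(influence.values()) / len(influence)
    return base * (1.0 - gain * mean_influence)


stable = influence_vector({"illumination_stability": 1.0, "multi_camera_coherence": 1.0})
noisy = influence_vector({"illumination_stability": 0.0, "multi_camera_coherence": 0.0})

print(modulated_threshold(1.0, stable))  # threshold relaxed under stable conditions
print(modulated_threshold(1.0, noisy))   # threshold tightened under unstable conditions
```

The rule is a fixed function of measured conditions, so the same environment always yields the same threshold and no training step is involved.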

CIP-13 continues the company’s commitment to maintaining the world’s most comprehensive portfolio for physics-anchored perception, inspection, and cognition.

Together with CIP-12 and PCT-2, CIP-13 secures priority for:

physics-structured semantic modulation,

object-resolved inspection logic,

production-line intelligence,

physics-anchored epigenetic control systems, and

deterministic drift-based reasoning frameworks.

CIP-13 further harmonizes public technical disclosures, demonstrations, and investor-facing material with the global patent record.

VisualAcoustic.ai Announces Major Patent Filings Advancing Physics-Anchored Cognitive Intelligence

New PCT and CIP applications establish the global foundation for deterministic, physics-validated AI systems.

Champaign, IL — December 2, 2025 — VisualAcoustic.ai, a pioneer in physics-anchored cognitive systems, today announced two major patent milestones: the international filing of PCT-2 and the U.S. filing of CIP-12, further expanding the company’s breakthrough technology for safe, deterministic, and physically validated machine cognition.

Together, these filings define the world’s first Physics-Anchored Cognitive Intelligence (PACI) framework — an artificial intelligence paradigm that forms meaning only when real-world evidence is coherent, stable, and measurably grounded in physics, rather than through neural-network training or statistical inference.

The newly submitted PCT-2 application protects a comprehensive architecture for physics-anchored cognition, including:

Physics-Anchored Semantic Drift Extraction (PASDE) — a method for extracting real-world change validated by physical invariants

Pattern-Anchored Drift Quantization (PADQ) — converting physical drift into structured representations

PHOENIX — a staged developmental engine that forms meaning only from physically admissible evidence

Semantic Genome (SGN) — a graph-structured meaning repository with lineage and coherence tracking

VGER (Global Epigenetic Reasoner) — a physics-constrained reasoning engine ensuring deterministic behavior

VASDE Cognitive Architecture — a multi-module system integrating drift extraction, semantic formation, coherence evaluation, and high-level cognition

PCT-2 ensures worldwide protection for VisualAcoustic.ai’s core intellectual property, enabling deployment in manufacturing, robotics, semiconductor inspection, infrastructure monitoring, scientific instrumentation, and visibility-degraded automotive systems.

CIP-12 builds on earlier filings by introducing the field of Developmental Semantics, formalizing how a physics-anchored cognitive engine can grow, refine, and stabilize semantic understanding over time.

Key innovations include:

PHOENIX developmental stages, establishing a structured semantic maturation process

Expanded Semantic Genome (SGN) with lineage, branching, and long-horizon coherence

Physics-Anchored Meaning Formation (PAMF), enabling deterministic meaning formation without datasets or gradient descent

PASCE: Physics-Anchored Semantic Coherence Engine, ensuring semantic activation is physically admissible

Domain-specific embodiments across PCB electronics, semiconductor wafers, robotics alignment, and fog-impaired navigation

CIP-12 provides a legally robust framework for deterministic semantic growth, enhancing safety, explainability, and long-term reliability.

“Today’s neural networks make statistical guesses. They don’t know the world — they approximate it,” said Stephen Francis, founder and inventor of VisualAcoustic.ai. “Our system does the opposite. It forms meaning only when the physical world provides stable, measurable evidence. These filings solidify the scientific and legal foundation for a new class of AI that never hallucinates, never fabricates, and never escapes physics.”

VisualAcoustic.ai’s architecture is built for applications where safety, transparency, and physical correctness are paramount: semiconductor lithography, industrial inspection, structural monitoring, robotics, advanced sensing, and automotive intelligence in fog, night, and glare.

With PCT-2 and CIP-12 now filed, VisualAcoustic.ai holds one of the most comprehensive patent portfolios in physics-grounded artificial cognition. These filings serve as a global foundation for future expansions of the technology, including additional CIP applications and forthcoming prototypes demonstrating deterministic cognition in real-world conditions.

VisualAcoustic.ai is a research and development company focused on building cognitive systems grounded entirely in physical evidence. Using multi-modal sensing, drift physics, photonic measurements, and structured developmental semantics, the company is pioneering a new class of deterministic, safe, and transparent artificial intelligence systems for high-value scientific and industrial domains.

For more information, visit: https://visualacoustic.ai

The Physics-Anchored Cognitive Intelligence (PACI) architecture developed by VisualAcoustic.ai is fundamentally different from neural-network systems, statistical inference engines, or conventional sensor-processing pipelines. Because PACI is built on physics-first constraints, drift-governed evidence, and interdependent developmental semantics, the architecture cannot be reconstructed, partially copied, or substituted without collapsing the functions protected by VisualAcoustic.ai’s patent family.

Many AI systems can be approximated or replicated using open-source libraries and trained models. PACI cannot. The architecture is structurally indivisible: every module depends on the physical correctness of the preceding module. Any attempt to replace one component with a lookalike (e.g., a CNN, autoencoder, or heuristic filter) destroys the physics-anchored semantics that the upper layers require.

At the base of the system, Physics-Anchored Semantic Drift Extraction (PASDE) captures only those changes that satisfy optical, geometric, temporal, and coherence constraints. PASDE does not “perceive” in the machine learning sense; it enforces physical admissibility. If PASDE is replaced with a learned model or synthetic feature extractor, the system no longer interprets real-world drift—it interprets the model’s internal biases. This breaks all dependent claims and instantly invalidates any design-around as a PACI equivalent.

Pattern-Anchored Drift Quantization (PADQ) then transforms physically admissible drift into quantized signatures that encode recurrence, persistence, inter-frame coherence, multi-angle agreement, and physical stability. These representations are not embeddings, not vector features, not latent codes. They are physics-validated quantized drift primitives. There is no statistical analogue. Any substitution—no matter how “clever”—fails to produce the structural invariants that the downstream developmental engines require.

On top of PADQ, the PHOENIX developmental engine constructs meaning through a gated, staged semantic maturation process. PHOENIX does not classify; it develops. Its semantics emerge only from persistent, multi-step drift validation, not from pattern recognition or inferential shortcuts. A design-around that bypasses PHOENIX or substitutes it with a learning-based model breaks the developmental semantics patents and cannot replicate the system’s operational behavior.

The Semantic Genome (SGN) provides verifiable lineage and structural coherence for all semantic units. SGN is not a database or a graph neural network; it is a physics-anchored semantic structure that stores the exact drift history, provenance, branching logic, and coherence conditions from which each meaning arises. No ML system can generate or store this lineage, because learned features do not represent physical cause-and-effect relationships.

At the reasoning level, VGER (Global Epigenetic Reasoner) ensures that no semantic inference is accepted unless it is justified by a complete, drift-validated causal record. Replacing VGER with any learned inference component destroys traceability, safety, and determinism—again breaking equivalence to PACI and excluding any potential workaround from the protected domain.

Most importantly, the PACI architecture is tightly coupled:

PASDE feeds PADQ.

PADQ feeds PHOENIX.

PHOENIX feeds SGN.

SGN feeds VGER.

Any intervention—at any layer—prevents downstream modules from operating, rendering the system non-functional. This is intentional and fully protected under VisualAcoustic.ai’s patent filings.
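The strict ordering of the five modules can be sketched as a gated chain in which a rejection at any stage stops everything downstream. The stage bodies below are placeholders invented for illustration (the three-frame rule, the rounding step, and the dictionary keys are all assumptions); only the module names and their order come from the text.

```python
from typing import Callable, List, Optional, Tuple

Evidence = dict
Stage = Callable[[Evidence], Optional[Evidence]]


def pasde(e: Evidence) -> Optional[Evidence]:
    # Placeholder gate: admit only drift that persisted for at least 3 frames.
    return e if e.get("frames_persisted", 0) >= 3 else None


def padq(e: Evidence) -> Optional[Evidence]:
    # Placeholder: quantize the drift magnitude to one decimal place.
    return {**e, "quantized": round(e["drift"], 1)}


def phoenix(e: Evidence) -> Optional[Evidence]:
    # Placeholder: attach a semantic label to the quantized drift.
    return {**e, "meaning": "persistent-drift"}


def sgn(e: Evidence) -> Optional[Evidence]:
    # Placeholder: record which stages produced the meaning.
    return {**e, "lineage": ["PASDE", "PADQ", "PHOENIX"]}


def vger(e: Evidence) -> Optional[Evidence]:
    # Placeholder: accept the inference only if a lineage record exists.
    return e if e.get("lineage") else None


STAGES: List[Tuple[str, Stage]] = [
    ("PASDE", pasde), ("PADQ", padq), ("PHOENIX", phoenix), ("SGN", sgn), ("VGER", vger),
]


def run_chain(stages: List[Tuple[str, Stage]], evidence: Evidence) -> Optional[Evidence]:
    """Run the stages in order and stop as soon as one rejects its input."""
    current = evidence
    for name, stage in stages:
        current = stage(current)
        if current is None:
            print(f"{name} rejected its input; downstream stages did not run")
            return None
    return current


print(run_chain(STAGES, {"drift": 0.42, "frames_persisted": 5}))
print(run_chain(STAGES, {"drift": 0.42, "frames_persisted": 1}))
```

In this sketch the second call never reaches PADQ, which mirrors the claim that upper layers cannot operate without admissible output from the layer beneath them.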

PACI is not an architecture that invites imitation. It is a closed-loop cognitive ecosystem, scientifically grounded, legally defended, and structurally impossible to replicate through modular substitution. Any workaround ceases to be PACI and fails to deliver the safety, determinism, or semantic integrity that define the system.

ACI – Artificial Conscious Intelligence

A physics-anchored cognitive framework that activates only when drift evidence is coherent, stable, and physically admissible.

ASD / PASDE – Physics-Anchored Semantic Drift Extraction

Extracts physically persistent drift using multi-frame, dual-domain checks that reject noise and non-admissible change.

CAP – Cognitive Activation Potential

A composite stability score predicting whether validated drift may produce semantic or task-level meaning.

CCE – Conscious Coherence Envelope

A multi-dimensional boundary confirming drift resonance, persistence, and admissibility before higher cognition can activate.

CCSM – Cross-Camera Semantic Memory

Fuses drift and meaning across multiple VISURA viewpoints into a unified, contradiction-free consensus.

CRO / CRO-S – Cognitively Registered Observation (Stable)

A meaning-ready drift state that passes admissibility, resonance, and persistence checks.

CSW – Conscious Stability Window

A required timeframe of uninterrupted physical coherence before ACI can activate.

DDAR – Dynamic Drift-Admissibility Reference

A rolling physics-anchored reference that updates only when drift satisfies strict admissibility gates, ensuring safe adaptation under dynamic conditions.

DRMS – Drift-Resolved Material Signature

A stable drift fingerprint revealing material state, micro-defects, or surface condition across frames.

DRV – Drift Resonance Vector

Encodes harmonic, amplitude-phase stability between EMR and QAIR domains.

DRV-P – Photoelectric Drift Resonance Vector

A resonance vector derived from photoelectric emission patterns, capturing work-function shifts and nanoscale change.

ECE – Electromagnetic Continuity Encoding

Treats all matter as electromagnetically distinguishable, enabling universal drift extraction across sensing modalities.

EMR – Electromagnetic Radiation Layer

Primary optical, IR, and UV input stream used as the foundation for drift extraction.

GDMA – Golden Drift Match Algorithm

A precision algorithm comparing live drift patterns against golden-image drift profiles for high-resolution inspection.

GEQ – Golden-Edge Quantization

Quantizes canonical edge structures from golden captures, enabling stable drift comparison even under viewpoint or lighting changes.

INAL – Intent-Neutral Awareness Layer

A supervisory layer monitoring resonance lineage without producing meaning or influencing decisions.

MIPR-A – Meaning-Indexed Perceptual Representation

Grounds textual and symbolic cues (e.g., “DANGER 480V”) into measurable perceptual geometries that influence risk scoring.

MSC – Multi-Scale Consistency

An internal validation measure confirming that drift coherence persists across multiple spatial and temporal scales.

MSDU – Minimum Semantic Distinguishability Unit

The smallest drift difference reliably detectable and meaningful to the system.

PACF – Physical-Admissibility and Contradiction Filter

Rejects interpretations that violate physics, lineage, directionality, or sensor coherence.

PADR – Physics-Anchored Drift Reduction

Filters turbulence and highlights physically persistent drift across sequential frames.

PA-CI – Physics-Anchored Conscious Intelligence

A meta-stable cognitive state where all resonance, polarity, and coherence conditions overlap.

PBM – Physics-Based Masking

A mask-generation technique isolating drift-bearing regions without neural segmentation.

PERC – PhotoElectric Resonance Coherence

A photoelectric sensing pathway providing early micro-defect detection and reinforcing resonance validation.

PEQ-AGI – Physics-Evidence-Qualified AGI

High-level reasoning bounded to physically verified drift evidence.

PQRC – Pattern-Quantized Ranking Code

Encodes drift direction, magnitude, and lineage as structured, ranked arrow-matrix frames.

PSSS – Project-Specific Semantic Sphere

Maps PQRC structures into task-specific semantic categories.

PSYM – Persistent Semantic Overlay Module

Renders drift vectors, anomalies, and classifications on the display.

QAIR – Quantized Acoustic-Inferred Representation

Transforms EMR into an acoustic-like domain for dual-domain drift confirmation.

QASI – Quantum-Anchored Semantic Invariant

A quantum-stable vibrational signature used for drift validation.

QCCE – Quantum-Constrained Coherence Envelope

An extension of CCE integrating quantum-conditioned drift constraints.

QDP – Quantum Drift Partition

Discrete drift packets derived from Raman or fluorescence transitions.

Q-DRV – Quantum-Derived Resonance Vector

Captures quantized spectral transitions and coherent quantum drift behavior.

QLE – Quantized Ledger Entry

An immutable record of drift events, decisions, and system actions.

Q-RDCM – Quantum-Resonant Drift Coherence Model

Fusion model combining classical and quantum drift resonance inputs.

Q-SCVL – Quantum Self-Consistency Verification Loop

Quantum-augmented internal validation cycle confirming cross-domain coherence.

QLSR – Quantized Laser-Structured Recall

Structured-light grid storing spatial drift anchors for long-horizon recall.

RDCM – Resonant Drift Coherence Model

Evaluates EMR→QAIR harmonic stability to confirm admissible drift.

RSG – Resonant Stability Geometry

A geometric representation of stable resonance relationships across frames.

SGB – Static Golden Baseline

A fixed drift reference profile for controlled, precision domains.

SCVL – Self-Consistency Verification Loop

Closed-loop check ensuring drift, semantic state, and resonance remain contradiction-free.

SOEC – Snapshot Optimization Execution Control

Validates drift persistence across frames, confirming stable anomalies.

SPQRC – Sequential Pattern-Quantized Ranking Code

Encodes multi-frame drift evolution for lineage tracking.

SPSI – Semantic Polarity Stability Index

Evaluates directional drift consistency to confirm valid semantic interpretation.

SVDM – Structured-Visibility Drift Mapping

Visibility-aware drift mapping for fog, glare, smoke, and occlusion.

T-PACF – Task-Bound PACF

Restricts reasoning to operator-approved intent and safety rules.

UDV – User-Defined Variable

Operator-tuned parameter guiding thresholds, risk scoring, and alert behavior.

VASDE – VisualAcoustic Semantic Drift Engine

The full physics-anchored perception and cognition stack.

VISURA – Visual-Infrared-Structured-light-Ultraviolet-Acoustic Layer

The multi-modal acquisition layer generating raw EMR for drift extraction.

XVT – Transformer-Based Semantic Interpreter

Converts PQRC and drift signatures into contextual decisions and alerts.

We proudly announce the filing of the VASDE Transformer Architecture (XVTA) provisional patent for real-time semantic drift detection and anomaly classification.

XVTA: Real-time reasoning. Structured drift. Adaptive intelligence.

Patent-pending protection for the core VisualAcoustic platform engine, transforming VISURA multispectral signals into structured, acoustic-encoded PQRC frames for real-time anomaly detection, semantic drift recognition, and AI optimization.

VisualAcoustic Semantic Drift Engine (VASDE): Physics-First Anomaly Detection

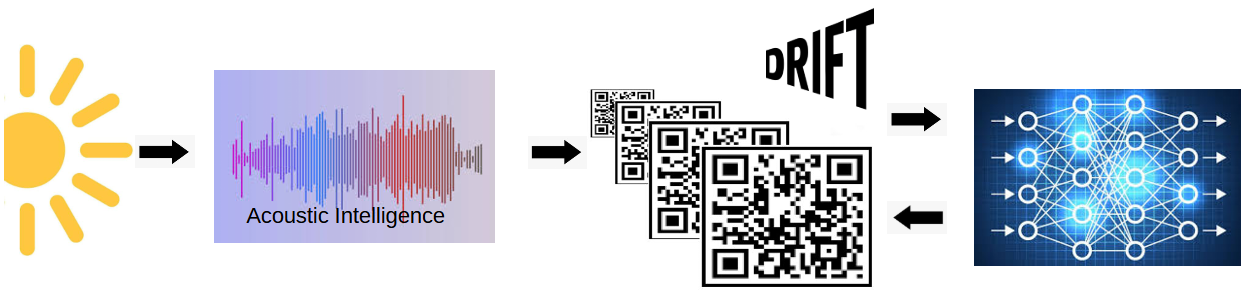

Phocoustic’s VisualAcoustic Semantic Drift Engine (VASDE) represents a new class of anomaly detection technology. Instead of relying solely on statistical deep learning or brute-force computer vision, VASDE encodes raw sensor data into a physics-informed representation that makes subtle instabilities visible and explainable.

At its core, VASDE treats visual data not just as pixels but as structured wavefields. Images and video streams are first decomposed into energy and phase patterns — a step that converts light intensity changes into a kind of “acoustic drift” signature. This transformation acts like “bottling light,” preserving both the magnitude and direction of micro-changes that would otherwise be lost in conventional imaging.

Once signals are converted into these drift-based representations, VASDE applies quantization and ranking processes designed to suppress cosmetic noise while preserving persistent deviations. For example, minor surface scratches or lighting fluctuations are filtered out, while genuine instabilities such as the onset of a crack, bubble formation in slurry, or solder fatigue on a PCB pin are reinforced.
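The quantize-then-rank idea can be illustrated with a toy drift field. The sketch below assumes each image cell carries a 2-D drift vector, uses eight compass sectors and a fixed magnitude step, and treats anything below one step as cosmetic noise. These choices, along with the names `quantize_drift` and `rank_cells`, are illustrative assumptions rather than the proprietary encoding.

```python
import math

# Eight direction codes, counter-clockwise from +x, with the y axis pointing up.
DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]


def quantize_drift(dx: float, dy: float, step: float = 0.5):
    """Return (direction, level) for a drift vector, or None if below one step."""
    magnitude = math.hypot(dx, dy)
    level = int(magnitude // step)
    if level == 0:
        return None  # too small to matter: treated as cosmetic noise
    angle = math.atan2(dy, dx)
    sector = round(angle / (math.pi / 4)) % 8  # nearest 45-degree sector
    return DIRECTIONS[sector], level


def rank_cells(drift_field: dict) -> list:
    """Quantize every cell and list the surviving cells, strongest drift first."""
    coded = []
    for cell, (dx, dy) in drift_field.items():
        quantized = quantize_drift(dx, dy)
        if quantized is not None:
            coded.append((cell, quantized[0], quantized[1]))
    return sorted(coded, key=lambda item: item[2], reverse=True)


field = {"A1": (0.1, 0.1), "A2": (1.0, 0.0), "B1": (0.0, -2.0)}
print(rank_cells(field))
```

Cell `A1` drops out because its drift is smaller than one quantization step, while `B1` ranks above `A2` because its drift is larger.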

A key differentiator is persistence gating. Traditional machine learning models often fire alarms on transient spikes or require massive training datasets to learn what “normal” looks like. VASDE instead uses temporal drift windows — ensuring that only anomalies that remain consistent across multiple frames are escalated. This mirrors how a human operator would “trust their eyes” only after confirming that something is not just a flicker or glare.
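Persistence gating can be sketched with a sliding window over per-frame drift scores. The window length, the threshold, and the names `PersistenceGate` and `gate_sequence` are assumptions for illustration; the text does not specify how VASDE sizes its temporal drift windows.

```python
from collections import deque


class PersistenceGate:
    """Escalate only when every frame in the window exceeds the threshold."""

    def __init__(self, window: int = 3, threshold: float = 0.5):
        self.window = window
        self.threshold = threshold
        self.recent = deque(maxlen=window)  # oldest result falls off automatically

    def update(self, drift_score: float) -> bool:
        # Store whether this frame exceeded the threshold, then check the window.
        self.recent.append(drift_score > self.threshold)
        return len(self.recent) == self.window and all(self.recent)


def gate_sequence(scores, window: int = 3, threshold: float = 0.5) -> list:
    """Feed a whole sequence of drift scores through a fresh gate."""
    gate = PersistenceGate(window, threshold)
    return [gate.update(score) for score in scores]


flicker = [0.1, 0.9, 0.1, 0.1, 0.1, 0.1]  # one-frame glare spike
crack = [0.1, 0.6, 0.7, 0.8, 0.9]         # drift that keeps growing

print(gate_sequence(flicker))
print(gate_sequence(crack))
```

The single-frame spike never fills the window, so it is never escalated. The sustained drift is escalated once three consecutive frames agree.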

The outputs of VASDE are encoded as directional glyphs and semantic prompts. Directional glyphs are vector-like overlays that show where drift is growing and in what orientation. Semantic prompts convert those glyphs into human-readable or machine-readable labels, enabling integration with dashboards, industrial control systems, or higher-order AI models. In this way, VASDE does not replace AI — it grounds it. A downstream transformer, for instance, can be guided by VASDE’s evidence trail rather than guessing from raw pixels.

This architecture makes VASDE domain-agnostic. It has been demonstrated in use cases ranging from semiconductor wafer polishing (detecting slurry-driven turbulence before yield is compromised), to PCB solder line inspection (spotting early pin cracks invisible to the naked eye), to advanced driver assistance systems (stabilizing perception in fog or glare).

Equally important, VASDE is explainable by design. Each anomaly is accompanied by drift statistics, thresholds, and visual overlays. Operators can see why an alarm fired, not just that it did. This transparency addresses a major shortcoming of black-box deep learning systems and helps build trust in critical environments where false positives or missed alarms can be costly.

In summary, VASDE combines wave-based physics with semantic reasoning to create a new form of anomaly detection: one that is faster, more explainable, and less dependent on massive training datasets. By “bottling light” into acoustic-like drift signals, VASDE reveals the hidden instabilities that precede defects, providing industries with a tool to prevent failures before they occur.

VASDE in Action

Below is a real sequence from the Phocoustic platform inspecting a printed circuit board.

This pipeline demonstrates how Phocoustic “bottles light” into physics-informed signatures that expose defects before they become failures. Unlike conventional systems, no massive datasets or retraining are required — anomalies emerge directly from the underlying signal physics.

Investor Note: While many inspection systems depend on brute-force deep learning or edge-trained models, VASDE’s approach is fundamentally different. Its physics-informed architecture creates proprietary representations that cannot be replicated by simply training another neural net. Workarounds using brute-force AI remain expensive, data-hungry, and less explainable, leaving Phocoustic uniquely positioned to scale into industries that demand precision and trust.

At Phocoustic, we believe the future of quality assurance and safety lies in moving beyond brute-force machine learning. Current anomaly detection systems rely heavily on massive datasets and deep learning retraining cycles. They can be powerful, but they are also fragile: retraining is costly, explanations are opaque, and results often fail to transfer between domains.

Phocoustics introduces a different paradigm: the VisualAcoustic Semantic Drift Engine (VASDE). Instead of trying to memorize millions of past examples, VASDE converts fresh sensor data into a structured, physics-informed representation that makes anomalies immediately visible and explainable.

Light carries rich information — in its intensity, color, and how it changes over time. VASDE treats this optical signal not as static images but as a dynamic, wave-like process. Using proprietary transformations, these signals are converted into an acoustic-like representation. In other words, light is “bottled” into a format that preserves its rhythms, shifts, and drifts in ways that resemble the way sound can be analyzed.

This step is not literal sonification. It is a mathematical reformulation that allows us to apply advanced tools from signal processing and physics — tools that were previously unavailable to vision alone.

Once the light signal is bottled, VASDE organizes it into highly structured frames. Inspired by robust error-correcting codes (like QR codes), these frames package anomaly-sensitive information into a standardized, repeatable format. Each frame is a compact summary of change, stability, and drift.

This design makes the data machine-readable at a glance: AI models do not have to search blindly across millions of pixels. Instead, the critical information is exactly where it needs to be, every time.

Anomalies emerge as semantic drift — patterns that persist, shift, or break in ways that the structured frames reveal instantly. Unlike brute-force AI, VASDE does not require retraining on every new defect type. Because the physics of drift is universal, the same pipeline can flag emergent anomalies on a PCB line, in a wafer fab, or in a foggy driving scene.

Where traditional AI would ask: “Have I seen this defect before?”

VASDE asks: “Does this signal behave as physics says it should?”

Structured frames can be passed into standard AI tools, including large language models, to produce human-readable inspection notes, compliance reports, or alerts. Because each anomaly is tied back to a physical drift signature, operators remain in control. The result is not a black box but a transparent, physics-anchored decision chain.

By bottling light into acoustic-like, structured frames, Phocoustics creates a bridge between raw sensors and explainable AI. This approach offers:

Traditional optical systems—even the most advanced machine-vision or interferometric platforms—share a common foundation: they use light to measure change. Whether that change appears as brightness, reflection, phase, or wavelength, the measurement remains confined to optical intensity and its numerical derivatives. These approaches are inherently limited by lighting conditions, calibration drift, and the statistical noise that accompanies every optical comparison.

Phocoustic’s VisualAcoustic Semantic Drift Engine (VASDE) changes the category entirely. Instead of comparing light to light, VASDE translates light into an acoustic-inferred domain where change is measured through physical persistence and resonance rather than raw intensity. The result is a new class of measurement—quantized acoustic inference—that detects stability, drift, and semantic meaning across time. Because the system no longer depends on optical correlation, it bypasses the vulnerabilities of conventional “light-based change detection.” In patent terms, this positions Phocoustic’s method as a distinct and non-overlapping domain of invention, offering a legally defensible and technically superior alternative wherever light is used to measure change.

At its core, VASDE represents a novel fusion of photonic and acoustic principles, linking the behavior of light with the physical logic of resonance and persistence. By transforming optical data into structured acoustic analogs, the system bridges two historically separate domains of measurement, visual sensing and acoustic inference, within a unified quantized framework. This cross-modal integration allows VASDE to interpret change as both a physical and semantic event, grounding every anomaly in measurable persistence rather than probabilistic inference. Through this fusion, Phocoustic establishes a coherent scientific pathway for interpreting light as structured resonance, enabling applications that extend beyond traditional optics without departing from established physical laws.

Phocoustics represents a shift from brute-force learning to physics-first understanding. Just as a medic bottles blood to analyze it for disease, VASDE bottles light into structured frames that expose anomalies before they escalate. This is anomaly detection that is not only powerful, but also explainable, efficient, and ready for the real world.

Phocoustic’s VisualAcoustic Semantic Drift Engine (VASDE) does not compete inside the traditional boundaries of optical imaging, machine-learning inference, or deep-learning anomaly detection. Instead, VASDE defines a new, classical, physics-anchored measurement domain that converts electromagnetic sensor data into structured acoustic-like drift representations with persistence, admissibility, and lineage safeguards.

This architecture creates clear separation from several crowded—and highly litigated—patent classes:

Traditional approaches rely on:

convolutional/deep networks,

transformers,

learned representations,

pixel-space classification or segmentation.

VASDE diverges completely:

Drift quantization (PADR) occurs before any semantic interpretation.

PQRC/SPQRC frames encode change as governed physical drift, not statistical features.

No dependency on trained ML models for anomaly scoring.

Physics-anchored semantics reduce overlap with image-model patents.

This places Phocoustic outside the scope of most computer-vision and deep-learning IP.

Many patents center on:

optical band ratios,

reflectance features,

hyperspectral cube analysis,

wavelength-domain classification.

VASDE’s domain is different:

Optical measurements are treated as physical waveforms, not as pixel matrices.

The system performs dual-domain transformation into QAIR (acoustic-structured numerical domain).

Drift encoding occurs after conventional EMR capture, bypassing traditional optical-processing claim space.

QDP/QASI and PERC structures are derived from classical measurements of quantum-origin effects, not hyperspectral statistics.

As a result, VASDE falls outside many optics-based patent families.

Traditional systems—whether rules-based or ML-based—typically use:

raw sensor thresholds,

FFT/STFT analyses,

statistical change-detection,

feature extraction pipelines.

VASDE introduces new elements absent from these frameworks:

Persistence-Anchored Drift Reduction (PADR)

Resonance-Driven Coherence Models (RDCM / Q-RDCM)

Semantic drift encoding via PQRC/SPQRC

Self-Consistency Verification Loops (SCVL / Q-SCVL)

CCE/QCCE admissibility envelopes

These mechanisms create a novel evidence-governance architecture not covered by classical signal-processing patents.

Many XAI patents focus on:

post-hoc model visualization,

dependency graphs,

saliency layers,

or audit trails for neural-network inference.

Phocoustic separates itself entirely:

Explainability is native, not post-hoc.

Drift signals are physics-validated, not probabilistic.

Quantized Ledger Entries (QLE) record decision provenance at the evidence level.

User-Defined Variables (UDVs) and operator-intent gating guarantee transparent semantics, not opaque model reasoning.

This creates a non-overlapping claim space distinct from neural-XAI and regulatory logging patents.
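Evidence-level provenance of the kind attributed to Quantized Ledger Entries can be approximated with a hash-chained log. The sketch below uses SHA-256 chaining as a stand-in technique. It makes the log tamper-evident rather than physically immutable, and the entry fields and function names are assumptions, not the QLE format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(event: dict, prev_hash: str) -> str:
    # Serialize deterministically so the same entry always hashes the same way.
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(ledger: list, event: dict) -> None:
    """Append an event whose hash also covers the previous entry's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify(ledger: list) -> bool:
    """Recompute the chain and report whether any entry was altered."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
append_entry(ledger, {"frame": 12, "drift_level": 4, "decision": "escalate"})
append_entry(ledger, {"frame": 13, "drift_level": 4, "decision": "alert-operator"})
print(verify(ledger))
```

Because each hash covers the previous one, editing an earlier decision after the fact causes `verify` to fail for the whole chain.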

VASDE’s architecture is built on physics-anchored drift quantization, dual-domain resonance mapping, multi-layer admissibility envelopes, and classical measurements of quantum-origin effects. Together, these place Phocoustic outside many saturated patent classes and create a distinct, defensible, and enforceable IP zone centered on governed physical change rather than classical imaging, ML inference, or statistical signal analysis.

These domains are described for educational and comparative purposes only and do not constitute a guarantee of non-infringement or legal freedom to operate. Actual patent clearance depends on final claim language, examiner findings, and ongoing portfolio development.

The technologies described on this website are protected by one or more U.S. and international patent applications owned by Phocoustic Inc., including its Continuation-in-Part filings (CIP-4 through CIP-13) and related provisional, non-provisional, and PCT applications. Additional filings are planned to further expand and clarify the patent family.

These filings cover innovations in:

Dual-Wave Coherence (DWC) and EMR→acoustic dual-domain transforms

Persistence-Anchored Drift Reduction (PADR) and multi-frame physical drift validation

Adaptive Resonance Calibration (ARC) and Resonance-Driven Coherence Models (RDCM / Q-RDCM)

Quantum-Conditioned Drift Extensions (QDP, QASI, QCCE, Q-SCVL) based solely on classical measurements of quantum-origin physical phenomena

PhotoElectric Resonance Coherence (PERC) and DRV-P drift-vector formation

Phocoustic ACI Stack, including PASDE, PASCE, PACF, PA-CI, and Physics-Evidence-Qualified AGI (PEQ-AGI)

Phocoustic Governance Stack (CAF → CPL → OAL → CRE → PLM → QLE)

VISURA-based multi-modal acquisition and physics-anchored semantic mapping

All systems described operate entirely in the classical domain. References to “quantum-conditioned” drift refer only to classical sensor measurements whose underlying physical behavior arises from quantized interactions (e.g., Raman shifts, fluorescence lifetimes, photoelectric emissions). No quantum computing or quantum manipulation is performed or required.

All information presented on this site is provided for general educational, explanatory, or marketing purposes only. Nothing herein grants, nor should be construed as granting, any license or rights under Phocoustic’s patents, pending applications, copyrights, trademarks, or trade secrets.

Any unauthorized use, reproduction, reverse engineering, or modification of Phocoustic’s patented or patent-pending technologies is strictly prohibited.

© 2025 Phocoustic Inc. All rights reserved.

Phocoustic® is a registered trademark of Phocoustic Inc. in the United States and other jurisdictions. VisualAcoustic.ai and the Phocoustic logo are trademarks or service marks of Phocoustic Inc.

PhoSight and PhoScope are proprietary technology and product names used by Phocoustic Inc. to describe internal demonstration systems and related embodiments of the Phocoustic platform. They are not registered trademarks at this time. All other names, logos, and brands mentioned on this site are the property of their respective owners. Use of these names or references does not imply any affiliation with or endorsement by the owners.

All content and materials on this website, including text, images, videos, and downloadable documents, are protected under applicable copyright, patent, and trademark laws. Reproduction or redistribution of any material without express written permission from Phocoustic Inc. is prohibited.

VisualAcoustic.ai is the public-facing demonstration site for Phocoustic, Inc., showcasing our breakthrough work in physics-anchored anomaly detection, drift-based sensory intelligence, structured semantics, and the foundations of safe artificial cognitive intelligence.

This site will host demo videos, press releases, wafer/PCB examples, structured-light explorations, and summaries of our patent filings including CIP-10, CIP-11, CIP-12, and CIP-13.

Coming soon.